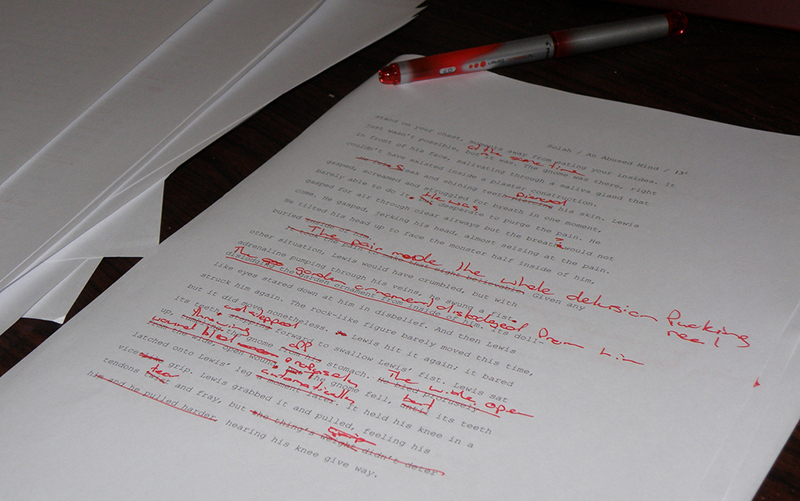

Do apostrophes matter, even though “nothing matters” in the end? German Lopez thinks they do. Is the period “no longer how we finish our sentences”? So says Jeff Guo. If you’re an editor like me, you notice writing about little things like apostrophes and periods, especially when you see it in big news outlets. First, in Vox in January, came “Apostrophes, explained.” Then, in the Washington Post in June, came “Stop. Using. Periods. Period.” Lopez and Guo are on opposite sides in the long-running “language wars” over English usage between prescriptivists, who (as caricatured) believe that nothing is right in usage except what their outmoded rules permit, and descriptivists, who (as caricatured) believe that nothing can ever be wrong. Those wars aren’t news if you follow the story of English, but how the two writers took their positions did seem new. In each article, I saw an oddness and wondered what it might mean about the state of battle.

Most of “Apostrophes, explained” lucidly spells out — and prescribes — the current usage conventions for apostrophes. The rest is an explanation of the explanation. “Why do apostrophes matter?” asks Lopez. “Ultimately they don’t,” he answers, “because nothing matters. . . . But apostrophes do offer a chance to show other people how smart you are.” He continues by saying,

I can’t even begin to tell you how many résumés and cover letters get thrown out because they have very basic grammar and spelling errors. Mastering apostrophes, then, offers a fairly simple way to quickly rise above everyone else. . . . It might not get you hired, but it might get you in the door. Plus, getting it right takes barely any effort once you know how. Just do it.

Does nothing matter? Many things must matter to Lopez, who wouldn’t be who he is if they didn’t. So why would he say that they don’t? And why, if apostrophes do matter, would they matter only because they “offer a chance to show other people how smart you are” and “rise above everyone else”? There are more attractive reasons to follow standard apostrophe usage, which has the same innocent utility as laws requiring people to drive on this or that side of the road. It’s a great service to readers when “you’re” can be counted upon to mean “you are.” But Lopez must have believed that his readers would close their ears to any such argument. Some of them probably resisted the argument he did make. “If anything at all matters,” I imagine them saying to themselves, “why should minutiae matter so much? Why should we do anything merely to please powers-that-be who judge our human worth apostrophe by apostrophe? Wouldn’t life matter more if apostrophes mattered less?” Such reactions would not make anyone likelier to master the use of apostrophes.

Why — despite his brave “Just do it” — was Lopez so cautious? He provides a clue. Not only does nothing matter, he says, but “being a grammar stickler in informal conversation is also pretty gross and generally should be avoided.” He avoids the much-more-frequent “grammar Nazi,” but it comes quickly to mind. Many people seem to believe that such Nazis lurk around every corner, ready to be unpleasant or worse. Just the other day, a cartoon reminded me that everyone knows and disapproves of them. “Sideshow Attractions for Grammar Nazis,” it read. Among the attractions was “a guy YOU’RE going to want to introduce to YOUR parents” (note the apostrophe).

The last time I Googled “grammar Nazi,” I got 1,660,000 hits, including many images of a “G” that looks like a swastika. “Grammarian” drew only 1,230,000, even though grammarians were performing their evils long before Hitler. The Urban Dictionary’s top definition of “grammar Nazi” was “someone who believes it’s their duty to attempt to correct any grammar or spelling mistakes they observe.” Not a very appealing someone. The next definition was “a person who uses proper grammar at all times, esp. online . . . ; a proponent of grammatical correctness. Often one who spells correctly as well.” “Grammar Nazi” seems harsh for this correct speller. Does the punishment fit the crime?

Lopez doesn’t fear or hate grammar Nazis (if they can contain themselves), but he does feel a need to reassure those who do. He’s like Frank L. Cioffi, whose new book One Day in the Life of the English Language begins this way: “Grammar handbook. The words provoke dismay and revulsion in the hearts of millions. The proverbial ugly duckling of textbooks, the grammar handbook might well be the most dreaded yet least read or consulted book people own. But this grammar handbook strives to be different.” Different or not, why must it sidle into the world so timidly?

Now to periods, as considered by Jeff Guo of the Washington Post. His “Stop. Using. Periods. Period.” expands upon an earlier piece in the New York Times, “Period. Full Stop. Point. Whatever It’s Called, It’s Going Out of Style.” Both describe something happening in text messages and social media: the disappearance of most periods and a shift in the meaning of those that remain. But Guo goes further. He begins with an imagined dialogue:

Have you ever watched parents try to text with their children? One hilarious type of misunderstanding goes like this:

Parent: I am waiting for you in the car.

Child: r u mad?

Parent: I am not mad.

Parent: I am telling you I am waiting.

Child: what?????

The poor mom or dad doesn’t understand one of the cardinal rules of texting, which is that you don’t use periods, period. Not unless you want to come off as cold, angry, or passive-aggressive.

“This fascinating development,” says Guo, “has been brewing for at least two decades, ever since kids were logging onto AOL Instant Messenger. The period is no longer how we finish our sentences. In texts and online chats, it has been replaced by the simple line break.” The period is now “an optional mark that adds emphasis — but a nasty, dour sort of emphasis.” It has “essentially become a stylistic device, which . . . recalls the freewheeling origins of Western punctuation.” A quick survey follows, from punctuation-less Greek and Latin texts through medieval manuscripts with occasional “punctus” marks to standardized writing reflecting reformers’ demands that a range of punctuation marks “be placed systematically, in accordance with the underlying structure of a sentence.” But, says Guo,

Linguists have noted that the kind of language that we employ in texts and instant messages . . . bears resemblance to the early days of writing, when manuscripts closely followed how people actually spoke.

Recent trends in punctuation also hark back to that age, when people used punctuation in more liberal and creative ways. The modern line break is like the medieval punctus — an all-purpose piece of punctuation that inserts pauses wherever we’re feeling it. And the period has gained expressive powers after it was laid off from its job marking the ends of sentences. Now it’s an icy flourish we deploy against frenemies and exes.

We should celebrate these developments. Writing is becoming richer. This is an exciting time. Period.

The Times didn’t allow online reactions but the Post did, and 354 commenters rose to the bait — an angry reader called it “click bait.” Anger was the norm. Although some approved on descriptivist grounds (e.g., “What many proponents of ‘proper’ grammar and punctuation overlook is that our language . . . has evolved and changed”), too many fell into a grammar-Nazi-esque stereotype combining rigidity with disgust for the young. “Not using periods or proper punctuation is a sign of laziness and illiteracy. Period,” complained one. “You can write like a hormone-soaked teen if you want to. I won’t,” objected another. And “haven’t you yet realized,” said a third, “that the self-absorbed, over-valued children of today dictate everything in society?” Such propriety might repel anyone. Some quieter commenters pointed out that, despite Guo’s contentions, the Post had used periods in the standard way. No one commented on the periods in “Stop. Using. Periods. Period.” but I wondered if that headline — revealing the icy fury of whom? — might be a witty copy editor’s revenge.

“Stop. Using. Periods. Period.” does present a fat target, even for the non-furious. One relatively measured comment was “Who in the world wants prose to resemble the way we speak? . . . I love to engage in conversation; I also love to read good writing. But they are two very different things.” The commenter sees a glaring problem: Guo never even hints that there are other kinds of prose than text messages, including his own prose in the Post. He might otherwise have conceded that different contexts invite different customs and expectations. The Times does better: It describes a study in which undergraduates reviewed “16 exchanges, some in text messages, some in handwritten notes, that had one-word affirmative responses (Okay, Sure, Yeah, Yup)” with and without periods. “Those text messages with periods were rated as less sincere, the study found, whereas it made no difference in the notes penned by hand.” The Times also manages to speak of the “decline” of the period without declaring it unemployed.

Another commenter sees the glaring irony in such sentences as “The period is no longer how we finish our sentences.” “If this claim is true,” he says, “whenever I use a period, then I am doing something that ‘we’ no longer do . . . If it is descriptive, it is descriptive of a new prescriptive situation which is presented as a fait accompli. I object.” This objector has a point: What — unless it’s “stop using periods” — could be more prescriptive than “we should celebrate these developments”? (And what could be more objectionable to a grammarian, or to anyone else who values making sense, than using the pronoun “we” without a clear antecedent? Who are “we” in this story? Texting teens? Everyone who ever writes English? Such tendentious vagueness is almost worthy of that master language-changer, Donald Trump.)

The oddness in “Apostrophes, explained” was German Lopez’s defensive worry that his well-meant prescriptions would seem so out of place, perhaps even so authoritarian, as to cause offense. The oddness in “Stop. Using. Periods. Period.” is quite opposite. Despite its good cheer, it’s remarkably aggressive. It turns a description of part of English into a prescription for all of it. I suspect that, like Lopez, Guo is a true believer. Lopez believes in conventions, though he fears for them. Guo believes in dismissing conventions — and, it seems, in being on the right side of history.

Lopez and Guo are both recent college graduates. Do they represent the future of English usage? Maybe so, judging by the current state of the language wars. I don’t want to re-fight those wars, since I myself believe that language should be described in depth, that language is constantly changing, that many old prescriptions deserve to fade away, and that any new prescriptions will fail if they don’t acknowledge what language-users actually do. The wars have created a widespread acceptance of these realities, which is a great victory for everyone. But bad feelings and bad ideas remain in the field, with (I believe) bad effects on our power to communicate with each other. A sampling may help to make this point.

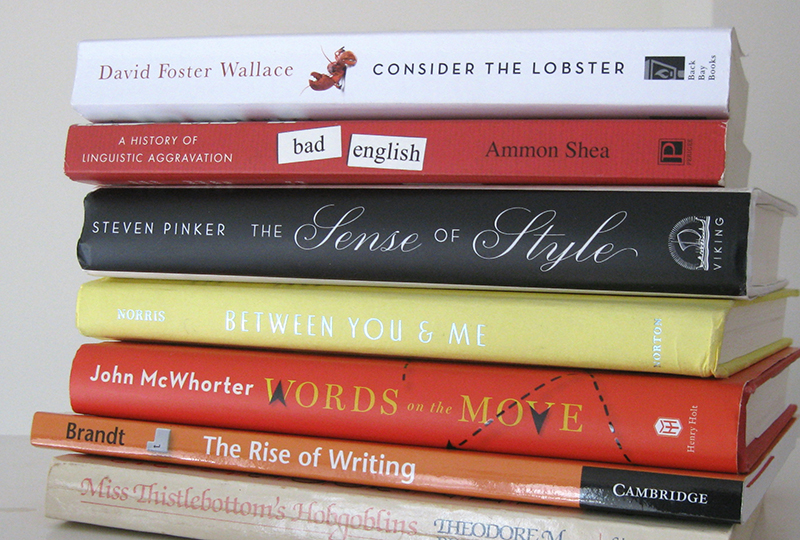

Causing much of the original damage were “conservatives who howled,” as David Foster Wallace called them in Consider the Lobster and Other Essays. They howled in response to a symptomatic event: the appearance in 1961 of Webster’s Third New International Dictionary of the English Language. Webster’s Third admitted many previously shunned words like “OK” and “ain’t” on grounds that “a dictionary should have no truck with artificial notions of correctness or superiority. It should be descriptive and not prescriptive.” Against such ideas rose up what Wallace (a prescriptivist but no caricature) disarmingly called “Popular Prescriptivism, a genre sideline of certain journalists . . . whose bemused irony often masks a Colonel Blimp’s rage at the way the beloved English of their youth is being trashed in the decadent present.” Much of it, he said, “sounds like old men grumbling about the vulgarity of modern mores. And some . . . is offensively small-minded and knuckle-dragging.”

If today’s true grammar Nazis have ancestors, those fuming Colonel Blimps must be among them. Fewer Blimps have prospered lately, but Blimpish grumbling can be found, for example, in Kingsley Amis’s The King’s English, which attacks “rank barbarism” and “the permissive years when nobody dared say in so many words that such-and-such an expression was illiterate or wrong” and in Simon Heffer’s Strictly Speaking, which over-confidently speaks of “the correct — because it is logical — use of English.” In Between You & Me: Confessions of a Comma Queen, the New Yorker’s Mary Norris (no Blimp) includes Strictly Speaking in a list of helpful books but calls Heffer “almost a parody of a British curmudgeon.”

There are still resentments on the descriptivist side, too. In The Sense of Style, the noted linguist Steven Pinker complains that “the self-proclaimed defenders of high standards have been outdoing themselves with tasteless invective” but nonetheless objects to “purist rants,” “purist bile,” and “pointless purist dudgeon.” If you make the prescribed choice between “which” and “that,” he says, “you’ll be a good boy or girl in the eyes of copy editors.” He pacifically declares that much of his book is “avowedly prescriptivist” and that “there is no such thing as a ‘language war’ between Prescriptivists and Descriptivists.” Yet I wish his book had addressed the major work of Bryan Garner, whose Dictionary of Modern American Usage was famously adored by Wallace when it came out in 1998. Garner’s Modern English Usage (its current title) is in its fourth edition — no doubt thanks to its enviable usefulness, both descriptive and prescriptive. Though Garner never hesitates to identify what currently seems standard or to defend valued forms that seem to be on the way out, he tracks disputed usages’ progress toward acceptance with a “language-change index” and even uses Google n-grams to show their frequency in published English.

I also wish Pinker had not evoked the “dimly remembered lesson[s] from Miss Thistlebottom’s classroom,” but he has an excuse: Theodore Bernstein’s 1971 Miss Thistlebottom’s Hobgoblins, subtitled The Careful Writer’s Guide to the Taboos, Bugbears and Outmoded Rules of English Usage. Bernstein’s book has been deservedly influential, but even setting aside the implicit sexism and the cheap-shot made-up name, his title is neither kind nor fair to the imagined Bertha Thistlebottom, who was trying to do the right thing for her students if in the wrong way. And his book also illustrates a pervasive tension. Bernstein resisted “those who maintain that whatever the people say is just fine” and took great pains to explain how careful writing should be done — but what could be more painlessly thrilling than snark aimed at the “rule mongering sticklers who have tried to squeeze the English language into a set of inflexible rules and outmoded definitions that only serve to stifle its growth and paralyze writers” (as the publisher’s blurb has it)? Jeff Guo’s “punctuation that inserts pauses wherever we’re feeling it” keeps this limber blurb-writer alive and kicking.

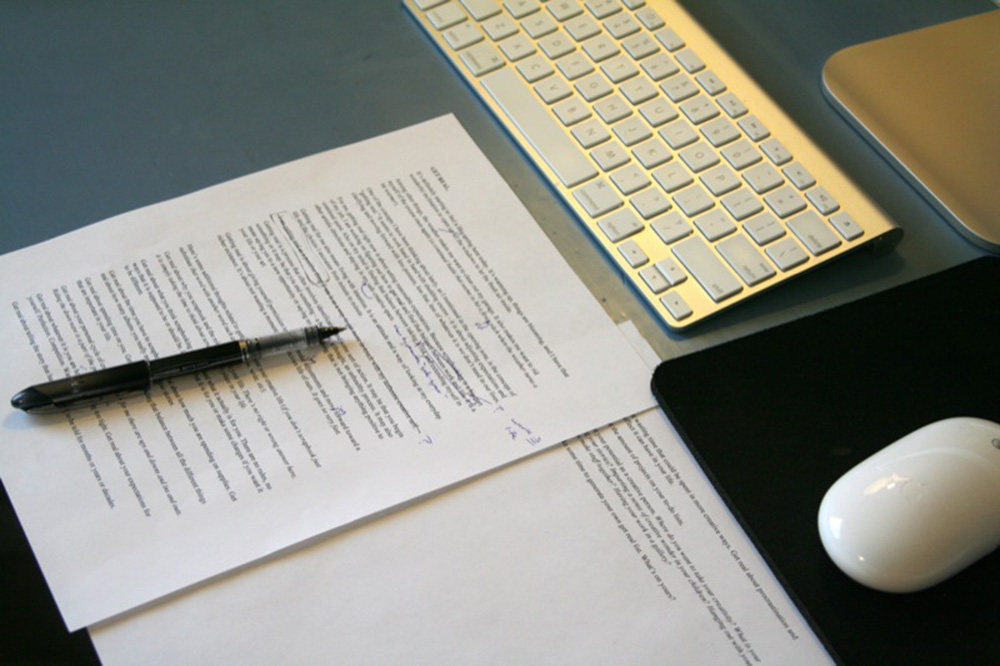

Here I should admit that I’m a teacher myself. I work for a university both as an editor and writer and also as a teacher of editing. An often-exemplary textbook I use in my classes, Amy Einsohn’s The Copyeditor’s Handbook, is caught in the same bind as Miss Thistlebottom’s Hobgoblins. Einsohn deprecates John Dryden for proclaiming that “sentences were no longer to end with prepositions because Cicero and his brethren did not do so” (you’d never know that Dryden was a great writer), and she really takes wing when rejecting the “dozens of rules that were never really rules, just the personal preferences or prejudices of someone bold enough to proclaim them to be rules.” But nonetheless, her book is full of rules — page upon page of them. They’re contemporary rules, and many of them seem well-founded or at least well-described. But when you’ve so vigorously condemned the rules those dopes made in the past, how seriously should anyone take the rules you yourself make in the present? Or anyone else’s rules?

Miss Thistlebottom and her predecessors continue to take a beating. Another recent book on Mary Norris’s list — Bad English: A History of Linguistic Aggravation, by Ammon Shea — does almost nothing but expose the dopes. Shea gives seven chapters, including “221 Words That Were Once Frowned Upon,” to “standards of usage that we hold dear and use to make ourselves feel better about our language (and worse about the language of others).” “People who hold themselves up as language purists” seldom escape: they “seem to spend considerably more time complaining about language than they do celebrating it.” They’re “unlikely to be swayed by an argument that is based on ‘If it’s good enough for Shakespeare it’s good enough for me.’” That empty argument (X has been done, so it’s fine to do X) keeps turning up, trying to sway readers as if it should be decisive. After all, wasn’t slavery good enough for Jefferson?

Shea’s attitudes are in the air, often in the form of unflattering psychological portraits (such as “language pedants are more likely to be introverted and disagreeable”). But his attacks are extreme. More often I come upon upbeat and ostensibly nonjudgmental descriptivist linguists who seem largely to have succeeded the howling conservatives and closet Blimps of the last century. One latter-day go-to pundit is Geoff Nunberg, who is quoted and re-quoted in the Times and Post articles on disappearing periods. When the singular, gender-neutral “they” (as in Jane Austen’s “everybody has their failing”) was named word of the year, he offered important history on NPR: Although many writers had used forms of “they,” he said, “the Victorian grammarians made it a matter of schoolroom dogma that one could only say ‘Everybody has his failing,’” a rule that “wasn’t really discredited until the 1970s, when . . . feminists made the generic masculine the paradigm of sexism in language.”

Although Nunberg sees no problem in the singular “they,” he dutifully reported that the Chicago Manual of Style “provides nine different strategies for achieving gender-neutrality without having to resort to using ‘they.’” He himself would rather not “make some pedant’s morning.” “If I could have back all the time I’ve wasted writing my way around a perfectly grammatical singular ‘they,’” he said, “I could have added another book or two to my name.”

He could also have asked himself why the Chicago Manual — which has long been revered for practically addressing difficulties — seems so ready to waste everyone’s time. He ignores a structural problem that surely concerns the Chicagoans: “they” can’t be “perfectly grammatical” when it might stand for something plural and simultaneously for something else singular. I too favor the singular “they,” but I wish we’d respect a conflicting imperative and take seriously the various pronoun-reference issues that “they” can create. There’s a better way to say such things as “a writer should be careful not to antagonize readers because their credibility will suffer.” Whose credibility was that? Chicago’s proposed write-around is “because the writer’s credibility will suffer.”

Here are more examples of descriptivist commentary, all involving long and heated controversies. The first is Anne Curzan on “literally vs. figuratively.” “Do remember that words change meaning over time,” she says. “The argument that this newer shift in the meaning of literally is illegitimate because a word cannot mean its opposite does not hold up given the other examples in English (e.g., dust, cleave, sanction). So I am here to assure you that the fact that people are using literally to mean both literally and figuratively does not mean that anyone is literally or figuratively killing the English language.” Another linguist, John McWhorter, chimes in: “The fact that literally can mean both itself and its opposite is — admit it — cool! The way literally now works is a quirky, chance development that makes one quietly proud to speak a language.” Similar, though tarter, is the online Merriam-Webster dictionary’s treatment of “disinterested,” which gets three definitions: 1a, “not having the mind or feelings engaged,” 1b, “no longer interested,” and 2, “free from selfish motive or interest.” In the ensuing “usage discussion,” Merriam-Webster reports that the once-established sense 2 “shows no signs of vanishing” (I disagree) but adds that “senses 1a and 1b will incur the disapproval of some who may not fully appreciate the history of this word or the subtleties of its present use.”

Such observations invite me — almost require me — not to get so bent out of shape when I dissent. It’s hard for me to admire them. If you’re a writer or an editor, and it’s your job and desire to communicate well in writing, words with conflicting meanings can give you fits, especially when one meaning is threatening another. If you want to use “literally” to mean “not figuratively” instead of “figuratively” (or “amazingly” or whatever), it hurts when you realize that many readers won’t understand you. You’ve lost an efficient way to say something that might be important. If you want to use “disinterested” to mean “free from selfish motive or interest,” and your readers think you mean “uninterested,” as they probably will, you’re in a jam. When a word like “disinterested” puffs up and floats away, an important idea floats with it, and you can’t celebrate. What particularly hurts both writers and readers in each of these cases is that no new expression has come forward to adequately fill the place of the disappearing one.

“Literally” and “disinterested” illustrate important truths. First, the tools of a language are common property. Anyone can use them, well or badly. Good usage will be imitated, and so will bad. When a tool is used well by one person, the next person will have a better chance of using it well too. When a tool is used badly, it may not work as well as it once did, whoever uses it next. Second, there are times when we need precise writing, but when tools like those words are broken by misuse, writing precisely gets harder. A third truth, forced on me again and again, is that no one can write, edit, or teach in isolation. If you want to use a language to any effect, enough readers must agree with you about the meanings of the words and patterns with which you try to express nuances and intricacies.

Among my students, colleagues, and other readers are many people with highly varied experiences of English. My urban, public university’s multilingual, multi-educational state of being is representative and healthy — healthy not least because it is representative — and I like it that way. But if you’re approaching such an audience, how do you get across tricky facts and ideas when so many different Englishes might influence perceptions of the English you’re trying to construct or improve? You can’t assume that “standard written English” is everyone’s common ground, and yet whatever you say must be intelligible to the largest possible readership. You yearn to find or create agreement in your readers’ minds about the workings of an English that can be meaningful, truthful, and equal to irreducible complexities.

Many published writers and editors feel this need, of course, whatever genre they’re working in. So also do many people who use words for a living without being publicly identified or even being considered writers at all. Their writing affects countless lives every day, a reality made less obscure by a recent book, Deborah Brandt’s The Rise of Writing. “Millions of Americans,” says Brandt, “now engage in creating, processing, and managing written communications as a major aspect of their work. It is not unusual for many American adults to spend 50 percent or more of the workday with their hands on keyboards and their minds on audiences.” For her book, she interviewed 60 of them, including a mortgage broker, a historical society librarian, an internet entrepreneur, a corporate manager, a legislative aide, a clinical social worker, a police detective, a farm manager, a nurse, a scientist, an insurance industry lobbyist — you get the idea.

Brandt’s book couldn’t be more interesting, but I want to draw upon it to make just one point. All the writing these millions of people do requires a stable, agreed-upon formal English. Their writing will — or won’t — help to make the world go round, and how well they write matters a lot. We shouldn’t be so taken with other modes of communication that we forget this. We need people who can write not just clear news stories but also clear insurance policies and police reports — and we need people who can read them, or if necessary see through them despite their opacity. Every day, usually without meaning to, people produce writing that should be better, and every day other people (or the same people) are disappointed, fooled, or even betrayed by it. They may be entertained, but they may not be well-served, when disruptive usage issues are pleasantly described but not usefully resolved.

It’s the job of everyone who works with words to make writing better. By “better,” I don’t mean “conforming to outmoded standards,” I mean “effectively and honestly conveying complex facts and thoughts.” Please let me testify that it’s impossible to do that without durable and nourishing ideas of what “better” is. Without such ideas and ways to realize them, how could I ever decide what to accept or change? How could I explain my choices, first to myself and then to others? How, when time, money, and perhaps tempers are short, could I achieve a single better sentence instead of all those not-so-good alternatives? How could I coherently multiply those better sentences over pages or over years? I couldn’t, and neither could anyone else. So whenever ideas that can make writing better seem to need defending, or ideas that can make writing worse seem to gain ground, I worry.

English isn’t going to hell in a handbasket, but I do suspect that understanding of standard written English — versus casually spoken, texted, and tweeted English — is shrinking. Reversing that trend is desirable, but is it also possible despite the headwinds? I think so. In any case, English has had long periods of regularizing and refining, thanks to such advocates as the sometimes wrong but hardly stupid John Dryden and the author of the grammar book Abraham Lincoln once walked six miles to obtain. Let’s include Lincoln himself among the better angels of our language.

Language-change is inevitable, but it can be influenced as well as observed. How might it best be influenced? In my heaven, first of all, everyone would agree that English-users urgently need standard written English — and speech sharing its precision — when trying to address difficult matters with suitable care. We need a trustworthy public English that will make sense, because it’s shared among many audiences. We need it for ordinary life: It’s no fun to waste hours “chatting” with a technician when your internet service won’t work because the cable company sent you lousy instructions. We need it for medicine, science, education, business, journalism, fiction, criticism — for so many ways of trying to communicate about human experience. We need it for the law, which can’t fulfill its purposes without the medium of a messy language that has nonetheless been made as clean and clear as possible. God knows we need it in politics (just think about the recent campaign fate of the words “honest” and “lie”). And we need to maintain it, just as we need to maintain our health and transportation systems. If it isn’t working well, we need to fix it.

What most needs fixing, and how would I fix it? First, I’d encourage actively good judgments — in our schools and daily lives — about expressions like “literally” and “disinterested.” The meanings of those two words have already disintegrated through a language-change process that merits conscious recognition. It’s the opposite of what happens when something new and distinct emerges, such as the deliciously self-accusing, self-forgiving “selfie.” Over time, the definite sense of a new or settled usage dissipates as readers who don’t quite understand it jump to conclusions. “Literally” looks like an intensifier, so it becomes one. Context seems to invite “disinterested” to mean “uninterested” and, without enough opposition, eventually it does.

Such destructive progressions are built into the way language is used, but we don’t have to lie back and enjoy them. Take “home in” and “hone in,” which are still fighting for the same territory. “Hone in” seems to be winning, but wherever I can, I’ll argue not only that “home in” is original but also that it’s just plain better: “to home” is to approach a point, while “to hone” is to sharpen an edge. “Set foot” similarly beats “step foot.” How can you step a foot? And why say “foot” when you’ve already said “step”? Here’s a similar usage problem that has a particularly bad effect on public discourse. Almost every day I hear “make the case” on radio news. Sometimes it seems narrowly intended to mean what it means in the law world: a made case is a well-constructed argument. At other times, though, it can mean little more than “assert.” If you say that someone made a case, and your hearer thinks that the case was persuasive, but all you really mean is that someone made a noise, you have not made the world a better place.

Even more important is disciplined attention to the structures and forms necessary for complex thought — that is, grammar in the largest sense. I’d particularly stress singular and plural ideas affecting agreement between subjects and verbs, pronouns and antecedents. Disciplined attention wouldn’t be easy since justifiable doubts of the adequacy of conventional grammar as a descriptive system prevail among linguists, who have developed yet more complicated systems to replace it. Those doubts, together with compelling pedagogical and political trends, have fed reluctance to use grammatical ideas in American teaching, from which those ideas have tended to recede. “Grammar” can seem hostile to teaching, if not also an aspect of a crushing control exercised by the privileged. But nonetheless, I think we need to renew our ways of employing it.

Screwed-up subject-verb relationships usually reflect too little grasp of English’s ways of expressing relative importance, as in “the closed-mindedness in these comments are astounding” (a response to the responses to “Stop. Using. Periods. Period.”). What is astounding, the comments or the closed-mindedness? Another example from my ballooning collection: “When the stakes in a case go up, the number of women litigators decline.” What declines, the number or the litigators? Another: “The awarding of contracts to Pearson in Kentucky and Virginia illustrate the problem.” The awarding illustrates the problem, not the contracts or the states. Another: “The clarity of his lightning-speed runs are jaw-dropping.” The clarity is jaw-dropping. Maybe the runs are jaw-dropping in themselves, but why then bring in “clarity”?

The difficulty here is two-fold: a subject is separated from its verb, and something potentially muddying gets between them. The singular “clarity” is what makes jaws drop, but the plural “runs” is closer to the verb, which becomes “are” when it should be “is.” Those runs are secondary to “clarity.” The “of” in “of his lightning-speed runs” should have told the writer that, but it didn’t. The writer didn’t recognize a pattern that normally works well, however unconsciously: “the roof of the house is red” means that the roof is red but not necessarily the rest of the house. Nourishing recognition of such patterns could help many writers avoid much nonsense in the first place.

Internet-driven reading habits — or internet-driven layoffs — may contribute to these confusions. So may blurrily expanding grammatical concepts. Amy Einsohn, for example, endorses the “notional” pluralizing in “a host of competitive offers have been received.” Why use and then immediately drop a singular idea? But blurry concepts are a smaller problem than no concepts. Too many of my intelligent and engaged students are quite at sea when they’re asked why, in the following sentence about fracking, “open” should be “opens” and “release” should be “releases.”

In these tighter rock formations, workers must first drill into underground shale beds, and then essentially saturate the area with a high-pressure cocktail of water, sand, and chemicals that open up the shale deposits and release the gas.

What is doing the opening and releasing here? The chemicals? The water, sand, and chemicals? No, a “high-pressure cocktail” with those ingredients is. The pronoun “that” is the subject of the verbs. It could stand for the plural neighbors preceding it, but instead it stands for the singular “cocktail.” The “of” phrase that follows “cocktail” is subordinate to “cocktail.” Singular verb forms and a sense of subordination would help send a reader back to “cocktail,” but many of my students seem not to have encountered such concepts when they were younger. Some are so lost that they think the subject of the verbs is “rock formations” or “shale beds.”

Ultimately the issue is not whether people can define “subject,” “verb,” and “pronoun.” It’s whether their minds can be clear when they read or write a sentence — whether they are able to know what is doing what in it. How can anyone understand fracking without understanding what releases the gas? How can anyone explain fracking, or anything similarly complicated, without multi-part sentences?

I’ve picked an example whose meaning turns on a pronoun because pronouns, like inflected verb forms, are critical when we want to make ideas hang together. Knowing how pronouns work — that is, how they’re supposed to work — can prevent no end of trouble, especially when what they stand for isn’t right at hand. Barack Obama was spectacularly a victim of his own bad pronoun reference during the 2012 campaign, when he spoke at a rally about “this unbelievable American system . . . that allowed you to thrive.” “Somebody invested in roads and bridges,” he went on, and then he opened the door: “If you’ve got a business” — he paused for a nanosecond — “you didn’t build that. Somebody else made that happen.” His opponents were all over him in a flash. “How can anyone dare to say that you didn’t build your own business,” they cried. By “that,” Obama meant the roads and bridges, indeed the whole American system, but there was “business” three words away from “that,” allowing his sentence to fit the enemy narrative to perfection. His mismanaged “that” echoed and re-echoed until election day.

In good writing, little things mean a lot — hence my readiness to dwell on “we,” “of,” and “that.” But these little things are part of a bigger idea. The language wars were worth fighting, but they are now so ugly and unproductive that they should end. The way to end them is not to embrace all language change simply because language changes. Instead we should begin to focus deliberately — with full awareness of the ways language changes — on our obligations to our own language as a kind of society. Everyone who knows a word of English participates in this society, affecting it to one extent or another. It’s almost as complicated and fluid as the human society that uses it. Like that society, it includes countless sub-societies, and every English user belongs to several of them. But among all the Englishes, with their overlapping customs and memberships, standard written English remains central and necessary.

Like a society of people, standard written English can get better or worse. When a troublesome usage is imitated, it doesn’t help for authorities to defend the usage, on almost moral grounds, merely because it exists through a natural process. A friend of mine once came upon some picnickers in a park tossing orange peels onto the grass. He’s always been direct, but he’s also deeply and acutely peaceable — a committed Buddhist, for example. In his forthright way, he complained about the orange peels, but the picnickers were righteously taken aback: “They’re biodegradable!” was the reply. Perhaps my friend deserved to be condemned as an orange-peel Nazi, but I doubt it. He was trying to improve a society. In a healthy society, efforts toward improvement aren’t always applauded, and they may be foolish or even wicked, but at least no one contends that such efforts are bad in themselves. In my English heaven, trying to make the language more orderly would be no less acceptable than trying to clean up trash.

But should anyone try to calcify and then idolize an English of the past? No, of course not. We need a flexible and explicitly intellectual approach, reflecting agreement on facts and ends if not means. The conventions of standard written English should be like a living Constitution struggling toward a “more perfect union,” not like a tribal Constitutionalism. But we shouldn’t minimize the value of precedent. If our standard written English changes slowly enough, we will understand our ancestors better, and our children will understand us better. So will new arrivals in the present. I’ll never forget a foreign student who unhappily agreed when I called English a “moving target.” Her English was quite fluent, thanks in part to a translatable education in grammar, but she still faltered at times. At my university, similar difficulties afflict many local students whose roots are abroad. They all deserve to be welcomed into the society of English, where they are needed. A stable formal English with distinct roads and borders would be especially helpful to people who are new to it, but of course it would also be helpful to everyone else. Maintaining it would even justify some fussing and heel-dragging.

How would we maintain standard written English? In our schools, homes, and workplaces we’d do everything possible to nourish a larger, deeper, more systematically accessible idea of what it is — not an occasion for oppressive nitpicking but instead for making distinctions, perceiving relationships, and thus coming to terms with realities. We’d value information about our language, but without embarrassment we’d also embrace the need to prefer some expressions over their less-defensible rivals. We’d re-admit the study of grammar, if not the worst grammar Nazis. We’d look for adequately basic ways to start younger students on a good path, and we wouldn’t insult their teachers.

How would we view “the rules”? We’d be ready to change them, but we’d also respect them as sources of clarity, even of honesty. We might picture ourselves at a crosswalk next to a mother and child waiting patiently for the light to change. Even if we were habitual jaywalkers, we’d help that mother set an example for her child. We’d also understand that rule-breakers need rules to break: Readers of good experimental writing can feel the envelope it pushes against. How would we resist using standard written English as no more than an imposition of privilege? We’d have to work very hard, especially if we enjoy privilege ourselves, to see the differences between standards and barricades. We’d have to question our own motives — but without paralyzing ourselves. My greatest teacher once said “I have so wished, all my life, to act from a single motive.” He knew that he couldn’t. If an action seems good in intent and probable result, perhaps an imperfect motive for it can accompany the more appealing ones.

And finally, in my heaven there would be no more editors — well, not many more professional editors. Not because no one could afford to employ them, but because education and conscience had combined to make good amateur editors out of nearly everyone. Being a good editor does sometimes require shooting down bad writing, but mostly it requires figuring out what a writer wants to say and helping the writer say it. All of us would have that obligation. When we saw something like “if you’ve got a business, you didn’t build that,” we’d want to help. We might even help our enemies. We’d look hard for the right antecedent for each loose “that” or “we.” If we could find no antecedent, and especially if context implied a destructive antecedent, we’d fight back hard. But with luck we’d find a good one and connect it to its pronoun. Whenever we could, we’d use the rules of our English society to make our human society better for all the fellow-citizens we share it with. •

Images courtesy of the author, ali edwards, Amy Aletheia Cahill, Margo Terc, Steve Rhodes, David Short, Shelly, Chrispl57, bsolah, BookMama, and Thomas Hawk via Flickr (Creative Commons)